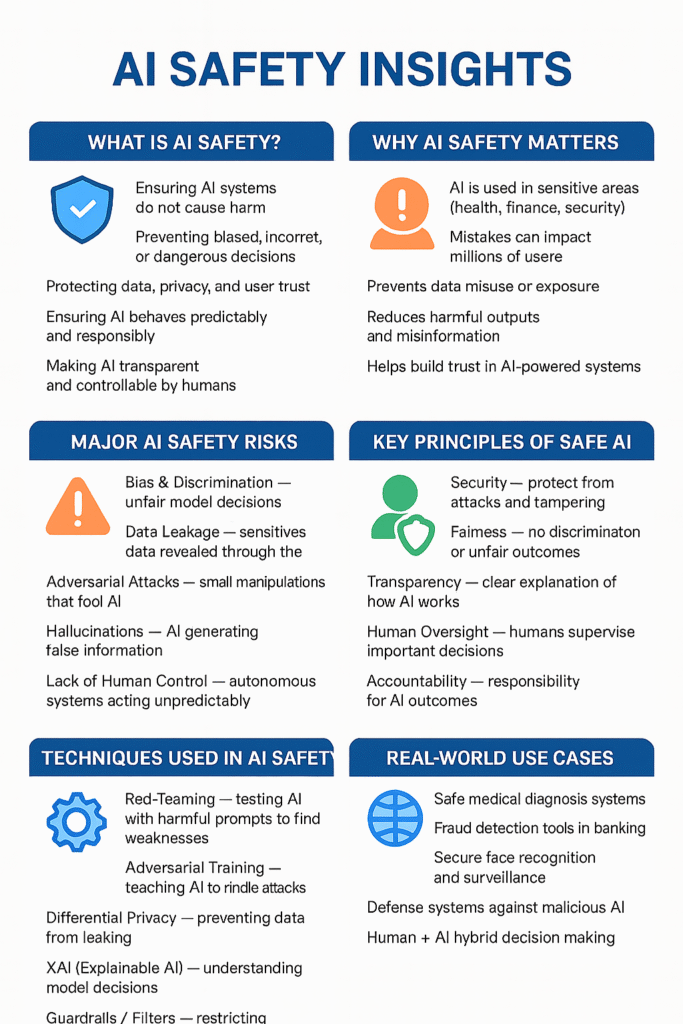

Learn essential AI safety practices, key risks, and simple ways to build secure, fair, and trustworthy artificial intelligence systems.

1. What Is AI Safety?

AI safety is about designing, developing, and using AI in ways that prevent harm. This means ensuring AI:

- Doesn’t make dangerous or biased decisions

- Cannot be easily manipulated

- Protects users’ data and privacy

- Avoids unintended actions or outcomes

- Operates transparently and can be held accountable

Goal: AI should help people, not harm them.

2. Why AI Safety Matters Today

As AI systems become more capable and more widely deployed, the consequences of their failures grow. Key concerns include:

- Model Bias: AI trained on biased data can make unfair decisions in areas like hiring, lending, or law enforcement.

- Data Leakage: Models can memorize sensitive training data and unintentionally expose it in their outputs.

- Adversarial Attacks: Malicious inputs (like images or text) can trick AI systems.

- Hallucinations: AI can confidently produce false or misleading information.

- Loss of Human Control: Autonomous systems may behave unpredictably without proper oversight.

Objective: AI safety works to catch and reduce these issues before deployment, and to keep monitoring for them afterward.
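
Model bias, the first concern above, can be checked with simple measurements. The following is a minimal sketch of one common fairness check, the demographic parity gap, which compares how often each group receives a positive decision. The function names and the hiring data are hypothetical and only for illustration; real audits usually combine several metrics.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical hiring decisions: 1 = advance to interview, 0 = reject.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_gap(decisions, groups)
print(gap)  # 0.5: group A advances 75% of the time, group B only 25%
```

A gap near zero means the groups are selected at similar rates. A large gap does not prove discrimination on its own, but it is a signal that the model and its training data deserve a closer look.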

3. Key Principles of Safe AI

Safe AI development relies on five core principles:

- Security: Protect AI from attacks and tampering.

- Fairness: Avoid discrimination or unfair outcomes.

- Transparency: Make AI decisions understandable.

- Human Oversight: Ensure humans supervise critical decisions.

- Accountability: Take responsibility for AI outcomes.
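
As one illustration of the Human Oversight principle, a system can route risky or uncertain decisions to a person instead of acting on them automatically. This is a minimal sketch, not a standard API: the `Decision` class, the `route` function, and the 0.9 confidence threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    label: str          # what the model wants to do
    confidence: float   # model's confidence, between 0 and 1
    needs_review: bool  # True if a human must approve before acting

def route(label, confidence, high_stakes, threshold=0.9):
    """Flag a decision for human review if it is high stakes or low confidence.

    The threshold is an illustrative assumption; a real system would tune it.
    """
    needs_review = high_stakes or confidence < threshold
    return Decision(label, confidence, needs_review)

# A routine, confident decision can proceed automatically.
print(route("approve", 0.95, high_stakes=False).needs_review)  # False

# A high-stakes decision always goes to a human, even when confident.
print(route("approve", 0.95, high_stakes=True).needs_review)   # True

# A low-confidence decision is escalated as well.
print(route("deny", 0.60, high_stakes=False).needs_review)     # True
```

Keeping the routing rule in one small function also helps accountability, because it is easy to see and audit exactly when the system acts on its own.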

4. Core AI Safety Techniques

Experts use several techniques to build safer AI:

- Red-Teaming: Test AI with worst-case scenarios to identify weaknesses.

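- Adversarial Training: Include harmful or manipulated inputs in training so the model learns to handle them correctly.

- Differential Privacy: Add carefully calibrated noise so that individual training records cannot be inferred or reconstructed.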

- Explainable AI (XAI): Make AI decisions easy to understand.

- Guardrails & Safety Layers: Use filters, policies, or constraints to control AI behavior.
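
To make the differential privacy idea above more concrete, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. This is a simplified stand-in: it protects a published statistic rather than a trained model, which in practice would need a technique such as differentially private training. The function names, the sample ages, and the choice of epsilon are assumptions for illustration.

```python
import random

def laplace_noise(scale, rng):
    """Draw one sample from a Laplace distribution centered at 0.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the count by at most 1), so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical data: ages of people in a sensitive dataset.
ages = [23, 35, 41, 52, 67, 29, 44]
rng = random.Random(0)  # seeded only so the example is repeatable
noisy = private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
print(round(noisy, 2))  # close to the true count of 4, but not exact
```

Because the published number is noisy, an observer cannot tell with confidence whether any single person is in the dataset, while the count stays accurate enough to be useful on average.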

5. The Future of AI Safety

As AI becomes more integrated into our lives, safety efforts will focus on:

- Detecting harmful content more effectively

- Making AI models easier to interpret

- Strengthening cybersecurity practices

- Creating international AI regulations

- Designing AI ethically and responsibly

✅ Conclusion

AI safety is essential because it keeps intelligent systems behaving responsibly. Strong security practices help prevent misuse and data leaks, fairness checks reduce bias-related risks, and clear guidelines let developers and organizations build trustworthy AI models. The result is AI that protects people, businesses, and the wider digital ecosystem.